From virtual assistants to self-driving cars, artificial intelligence (AI) has become a part of our daily lives, making things more comfortable and convenient. But as AI technologies continue to advance, they also bring significant privacy risks that could lead to our personal data being compromised if AI is used without proper checks and balances.

Privacy risks can arise in numerous ways, including data breaches, unauthorized access, misuse of data, intentional or unintentional bias and lack of transparency. Additionally, the pace of AI development is so rapid that regulations often lag behind, leaving companies with little guidance on how to protect personal data. This makes it essential for companies to consider the ethical and legal implications of AI technologies and opens the discussion concerning the importance of accountability, robust implementation of data protection measures, and cybersecurity safeguards.

How is AI collecting and using personal data?

As AI software becomes more advanced and intertwined with our lives, the types of personal data that these systems can collect are expanding rapidly. You might not even realize just how much data AI systems are gathering about you as you go about your day.

For years now, we have been handing the intelligent software on our phones, computers, smart speakers, and virtual assistants a wealth of information, and the list keeps growing: biometric data such as fingerprints and faces, internet browsing history, health data, personal and work contact details, financial information, shopping preferences and patterns, our comments, likes, and dislikes, and even everyday life events such as birthdays, weddings, and vacations.

Taken together, these sensitive data types form a comprehensive profile of our identity and interests. That can be unsettling, because we often entrust this information to entities that are unpredictable and unregulated.

User Consent and Data Control

User control over personal data has become a major concern as automated systems become more integrated into everyday life. Without proper user consent, sensitive information may be mishandled or exploited. Issues surrounding consent and control include:

- Inadequate Opt-In/Opt-Out Mechanisms: Many AI systems, including AI-powered search tools, do not provide clear options for users to opt in or out of data collection. Without these choices, users may unknowingly share personal information, increasing the risk of misuse or unauthorized access. Companies must implement transparent consent processes to give users full control over their data preferences.

- Limited Access to Personal Data: Users often have no visibility into what data has been collected about them by AI agents. Without access to this information, it is difficult for individuals to review, correct, or delete their personal data. Offering user dashboards or privacy portals can help address this issue, allowing users to easily manage their data and request changes.

- Lack of Transparency in Data Use: Many systems don’t clearly explain how personal information is handled. This leaves users unsure of who can access their data and why it’s being used. Regular updates and plain-language explanations of data policies can help users understand their rights and make informed decisions.

- Complex Data Policies: Data policies are frequently written in dense, legal jargon, making them difficult for users to understand. This complexity prevents individuals from making informed consent decisions. Simplified language and clearer explanations help people make knowledgeable choices about data sharing.

- Inconsistent Data Deletion Practices: Users who request data deletion may find that their information remains stored in backups or other databases. Inconsistent practices around deletion can lead to privacy breaches if the retained data is later accessed without authorization. Companies need to ensure data is fully removed when users request deletion, eliminating it from all storage locations.
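The opt-in and deletion points above can be made concrete with a small sketch. It is illustrative only: `ConsentRegistry`, `delete_everywhere`, and the store names are hypothetical, and real systems would also need to handle backups, logs, and third-party processors. The sketch assumes consent is recorded per user and per purpose, that no record means no consent, and that a deletion request is applied to every storage location.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRegistry:
    """Hypothetical per-user, per-purpose consent store.

    A missing entry is treated as "no consent" (opt-in by default).
    """
    _grants: dict = field(default_factory=dict)  # user_id -> set of purposes

    def opt_in(self, user_id: str, purpose: str) -> None:
        self._grants.setdefault(user_id, set()).add(purpose)

    def opt_out(self, user_id: str, purpose: str) -> None:
        self._grants.get(user_id, set()).discard(purpose)

    def allows(self, user_id: str, purpose: str) -> bool:
        return purpose in self._grants.get(user_id, set())


def delete_everywhere(user_id: str, stores: dict) -> list:
    """Remove a user's record from every store, including backups.

    `stores` maps a store name to a dict keyed by user_id (an assumption
    made for this sketch). Returns the names of stores that held data.
    """
    removed = []
    for name, store in stores.items():
        if store.pop(user_id, None) is not None:
            removed.append(name)
    return removed
```

Before using data for a given purpose, a caller would check `registry.allows(user_id, purpose)`. The list returned by `delete_everywhere` can serve as a simple record of where data was actually removed.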

Examples of how AI collects and processes data

AI technologies collect and process an enormous amount of data including personal information, from facial and voice recognition to web activities, and location data. While AI has numerous uses, it also presents several privacy challenges and risks.

- Social media: AI can use highly trained decision-making algorithms on social media platforms, such as Facebook and TikTok, or messaging apps such as Snapchat, to collect and analyze users' behavior, interests, and preferences and to offer highly personalized and targeted content and advertisements. Left unchecked, AI can perpetuate biases, amplify harmful content, and spread manipulated content such as deepfake videos. This can lead to the spread of misinformation, discrimination, and harm to individuals and society.

- Facial recognition technology: This technology is used in a wide range of applications, from security and law enforcement to marketing, to detect the presence of individuals and identify them by their appearance. AI systems capture images and videos and process them to extract facial features, creating digital representations of faces that can be used for comparison, identification, or verification. The collection, use, and processing of this data raises privacy concerns.

- Location tracking: AI collects location data through GPS, Wi-Fi, and cellular signals for various purposes, including personalization and business needs. The danger of location tracking lies in the potential misuse of this data. For instance, companies could use location data to infer sensitive personal information such as political affiliation or religious beliefs, and exposed location data can contribute to identity theft or other harms.

- Voice Assistants: Voice assistants record user conversations, often capturing audio unintentionally. This data is stored to improve recognition accuracy but can include sensitive information. Privacy risks arise when these recordings are accessed without permission or retained for unclear periods. Misuse of this data can lead to unauthorized profiling and potential breaches, raising concerns about the lack of transparency in how user voice data is managed.

- Web Activity Monitoring: AI algorithms track users’ browsing habits, including search history and page visits, often without explicit consent. This data is used to build detailed profiles for personalized advertising, but it also poses privacy issues. Tracking web activities can reveal sensitive information like health concerns or political views. The lack of clear user control over collected data increases the risk of unauthorized sharing and potential exploitation by third parties.

- Smart Devices: Smart home gadgets and IoT devices collect extensive user data, from daily routines to health metrics. AI processes this data for personalized experiences, such as adjusting settings or providing recommendations. However, many of these devices lack robust security, making them vulnerable to hacking. Users often have limited control over data collection and sharing, leading to potential misuse, or unauthorized access by malicious actors.

What are the privacy risks of AI?

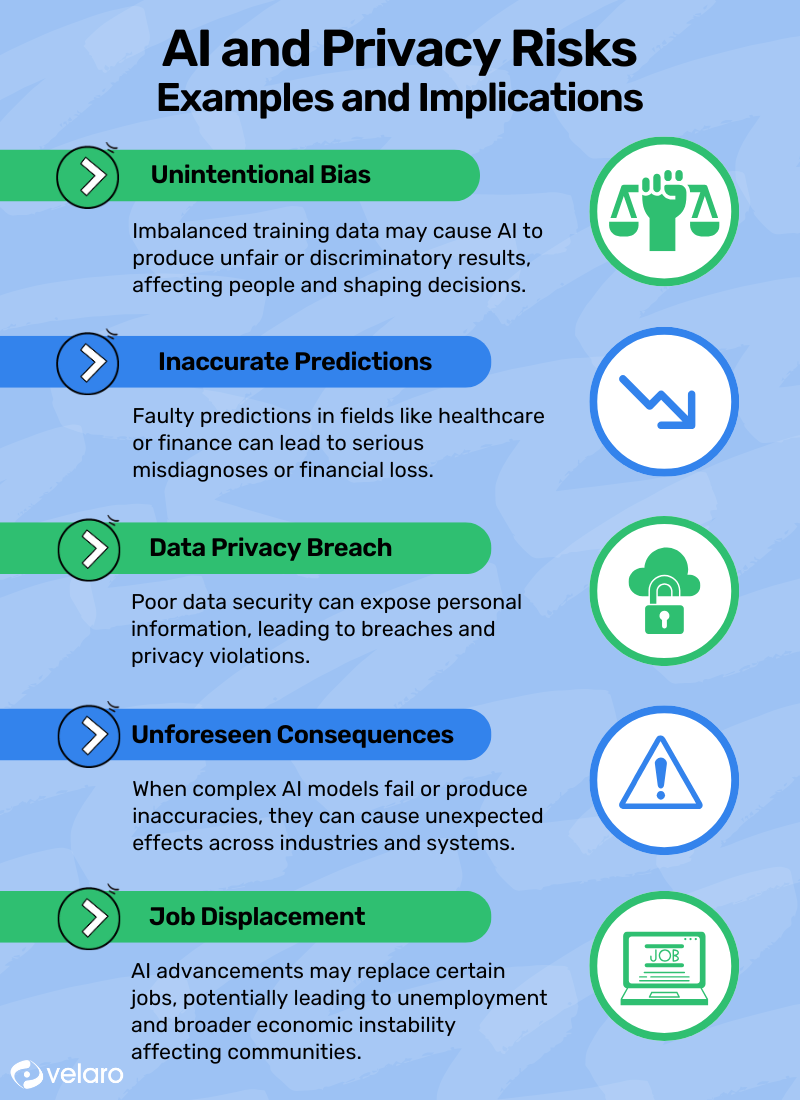

As we delve deeper into the world of artificial intelligence, there is no denying the potential benefits it can bring to our lives. However, as the use of AI becomes more widespread, many experts have raised concerns about AI data privacy risks, including:

- Unintentional bias: AI systems can exhibit bias based on the data on which they are trained. If the data is biased, the AI system will also be biased, leading to unfair or discriminatory outcomes.

- Inaccurate predictions: AI systems can make inaccurate predictions, which can have serious consequences in fields such as healthcare or finance. For example, if an AI system predicts that a patient is at low risk for a disease when they are actually at high risk, this could result in a missed diagnosis and delayed treatment.

- Unauthorized access to personal data: AI systems often require access to personal data in order to function. If this data is not properly secured, it can be accessed by unauthorized individuals or organizations. Users should also be aware of options to turn off AI overviews in certain platforms to minimize privacy risks.

- Unforeseen consequences: With machine learning becoming more complex, advanced, and automated, there is a risk that unforeseen consequences could arise. For example, an AI system used in a transportation system could inadvertently cause accidents or disruptions.

- Job displacement: As AI systems become more capable, there is a growing concern that they may cause an upheaval in the job market by outpacing human training and displacing workers. Unemployment rates may rise, and the economy could face significant disruption as a result.

Mitigating Privacy Risks of AI

What can companies do to reduce AI privacy risks? First, they need to implement data protection policies that keep customer data secure. This involves employing strong encryption methods, conducting regular security checks, and enforcing strict access controls to prevent unauthorized access to sensitive data. Additionally, companies need to adopt a privacy-by-design approach, ensuring that privacy considerations are built into the design and development of AI technologies from the beginning.

Here are some other key considerations that should be examined further, and that could use more research, guidance, and points of discussion:

Data minimization

Data minimization means ensuring that an AI system collects and uses only the data relevant to its purpose, so that it cannot access, process, or misuse non-essential personal information.
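As a rough illustration, data minimization can be approximated with an allowlist applied before a record ever reaches an AI system. The sketch below is one possible approach, not a prescribed method: the field names in `ALLOWED_FIELDS`, the `minimize` function, and the `user_id` key are all hypothetical. It also replaces the direct identifier with a keyed hash (HMAC-SHA256) so records can still be linked without exposing the raw ID. Note that this is pseudonymization, not anonymization.

```python
import hashlib
import hmac

# Hypothetical allowlist: only the fields the model actually needs.
ALLOWED_FIELDS = {"age_band", "country", "plan"}


def minimize(record: dict, secret_key: bytes) -> dict:
    """Return a copy of `record` reduced to allowlisted fields.

    The raw user_id is replaced by a keyed hash so the same user maps to
    the same pseudonym, but the original ID is not passed downstream.
    """
    slim = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    slim["subject"] = hmac.new(
        secret_key, record["user_id"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    return slim
```

An allowlist is used rather than a blocklist so that any new field added upstream is excluded by default until someone decides it is needed.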

Encryption

Companies should apply at least one form of encryption to the confidential information processed by AI systems, as a defense against malicious actors who aim to steal it.

Transparent data use policies

Companies should develop documentation and policies that clearly state how AI technologies use personal data and how they manage its visibility in Google search results. Organizations in general could benefit from open and honest discussions about the distinct roles and goals of using such information. Everyone would gain a better understanding of how the technology works, and these discussions may help establish the best practices needed for using such data.

Auditing and monitoring AI systems

Companies should run regular checks to detect discrepancies caused by untrustworthy actions from internal or external sources, and make sure that such events are not overlooked.
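One simple form such a check could take is a periodic review of access logs. The following is a minimal sketch under stated assumptions: the event format (`actor`, `role`, `record`), the `AUTHORIZED_ROLES` set, and the `MAX_READS_PER_ACTOR` threshold are all hypothetical, and real monitoring would draw on the organization's own logging and alerting tools.

```python
from collections import Counter

# Hypothetical policy values for this sketch.
AUTHORIZED_ROLES = {"support_agent", "privacy_officer"}
MAX_READS_PER_ACTOR = 3


def audit(events: list) -> list:
    """Scan access events and return human-readable findings.

    Flags (1) reads by roles outside the authorized set and
    (2) actors whose read count exceeds the threshold.
    """
    findings = []
    reads = Counter()
    for e in events:
        if e["role"] not in AUTHORIZED_ROLES:
            findings.append(
                f"unauthorized role: {e['actor']} ({e['role']}) read {e['record']}"
            )
        reads[e["actor"]] += 1
    for actor, n in reads.items():
        if n > MAX_READS_PER_ACTOR:
            findings.append(f"high volume: {actor} read {n} records")
    return findings
```

Running a check like this on a schedule and routing non-empty findings to a person is one way to make sure unusual access does not go unnoticed.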

Ethical considerations

There are several ways to reduce bias in AI systems. One strategy is to ensure that the data used to train the AI system is diverse and representative of the population it will be serving. This can involve collecting data from different sources and including a broad range of demographic groups. Additionally, AI systems can be designed to be transparent, so that users can understand how the system is making decisions and whether there is any bias present.

Another approach is to incorporate fairness metrics into the development of AI systems, which can help identify and address potential biases, although systems built this way can still have issues. Finally, ongoing monitoring and evaluation of AI can help to identify and address any biases that emerge over time.
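To give a sense of what a fairness metric looks like in practice, here is a small sketch of one common choice, the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The function names and the example group labels are assumptions made for illustration. This is only one of several fairness metrics, and a value near zero does not by itself show that a system is fair.

```python
from collections import defaultdict


def selection_rates(groups: list, predictions: list) -> dict:
    """Share of positive predictions (value 1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for g, p in zip(groups, predictions):
        totals[g] += 1
        positives[g] += int(p == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(groups: list, predictions: list) -> float:
    """Largest gap in selection rate between any two groups."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


# Example: group "a" is selected 3 times out of 4, group "b" 1 time out of 4.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
gap = demographic_parity_difference(groups, predictions)  # 0.75 - 0.25 = 0.5
```

Tracking a number like `gap` over time is one way to support the ongoing monitoring described above.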

The role of transparency and accountability in developing and deploying AI

Artificial Intelligence technologies have transformed the way businesses operate, offering companies new levels of connectivity, efficiency, and convenience. However, the privacy risks of AI must not be ignored. As AI technologies become more advanced, so do the privacy risks they pose. Organizations must take a proactive approach to reduce these emerging privacy challenges and risks, implementing secure data protection principles, privacy-by-design architecture, and ethical considerations.

Additionally, by complying with privacy regulations such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), companies can establish comprehensive privacy policies that outline practices for handling personal data, including the right to access, correct, and delete personal data.

Ultimately, safeguarding personal data from AI privacy challenges should be a top priority for companies today. With AI applications becoming increasingly abundant, taking the necessary steps to protect user data is essential for any business that wants to remain competitive in the digital future.

How Velaro is addressing privacy challenges posed by AI systems to protect personal data

As a company providing AI-powered customer engagement solutions, at Velaro we recognize and understand the importance of protecting personal data from misuse. To ensure privacy and security, we take several steps to protect personal data, such as implementing strict data access controls, robust encryption methods, and regular security audits. We also conduct comprehensive data protection impact assessments to identify and mitigate potential privacy risks associated with our AI systems.